- Published on

Beware of DBT Incremental Updates Against Snowflake External Tables

- Authors

- Name

- Danny Mican

Snowflake does not currently support sub query pruning which can have serious cost implications for DBT incremental updates against external tables. Careful care must be taken When using DBT incremental models to query against large partitioned external tables. This post shows how to address the incremental update by breaking the incremental query out of a subquery.

DBT Incremental models load data gradually. Each run focuses on a limited (i.e. incremental) dataset, opposed to a full data set. This requires using a query predicate to limit the dataset. This predicate is often based on event time. The DBT documentation shows the following example of loading data incrementally based on the last most recent item:

{{

config(

materialized='incremental'

)

}}

select

*,

my_slow_function(my_column)

from raw_app_data.events

{% if is_incremental() %}

-- this filter will only be applied on an incremental run

where event_time > (select max(event_time) from {{ this }})

{% endif %}

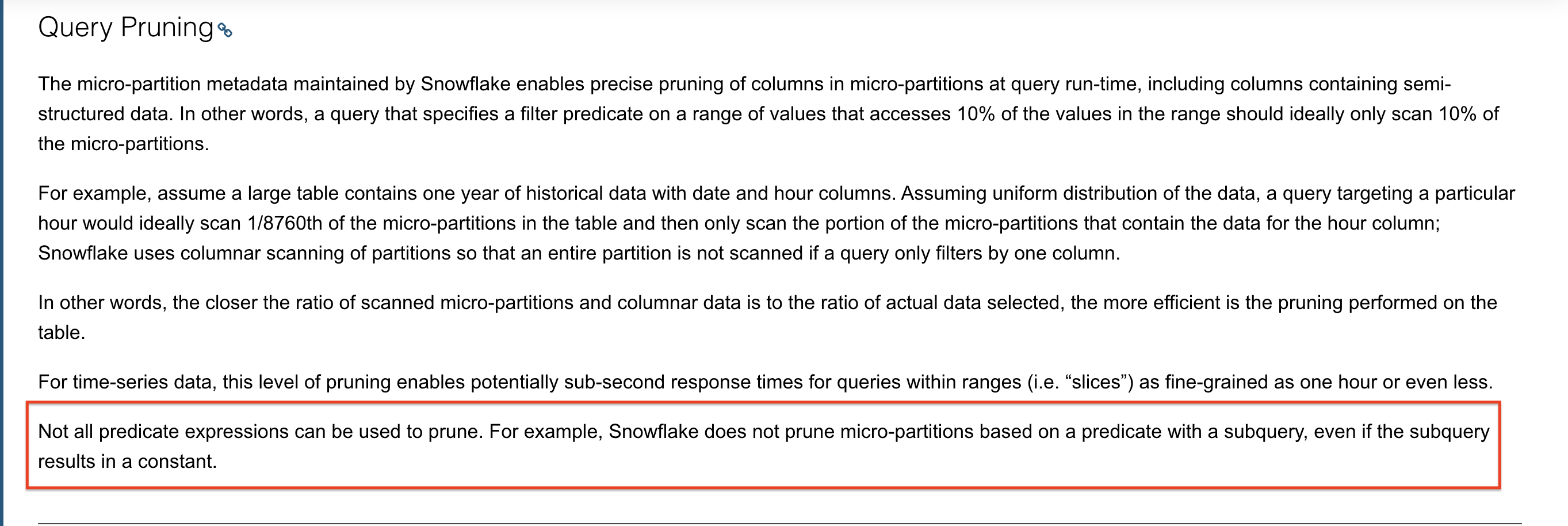

DO NOT USE A SUBQUERY WHEN QUERYING AGAINST A SNOWFLAKE EXTERNAL TABLE. The snowflake query planner is unable to leverage query pruning for sub-queries, even when the subquery returns a literal, which is the case of query above. From the snowflake docs:

Not all predicate expressions can be used to prune. For example, Snowflake does not prune micro-partitions based on a predicate with a subquery, even if the subquery results in a constant.

Instead:

- Execute the MAX(...) query as its own statement

- Pass the result as a literal to the incremental predicate

{{

config(

materialized='incremental'

)

}}

{% set query %}

SELECT max(event_time) FROM {{ this }};

{% endset %}

{% set max_event_time = run_query(query).columns[0][0] %}

select

*,

my_slow_function(my_column)

from raw_app_data.events

{% if is_incremental() %}

-- this filter will only be applied on an incremental run

where event_time > '{{ max_event_time }}'

{% endif %}

A macro based approach which handles compile is:

# macros/get_max_event_time.sql

{% macro get_max_event_time() %}

{% if execute and is_incremental() and env_var('ENABLE_MAX_EVENT_TIME_MACRO', '1') == '1' %}

{% set query %}

SELECT max(event_time) FROM {{ this }};

{% endset %}

{% set max_event_time = run_query(query).columns[0][0] %}

{% do return(max_event_time) %}

{% endif %}

{% endmacro %}

{{

config(

materialized='incremental'

)

}}

select

*,

my_slow_function(my_column)

from raw_app_data.events

{% if is_incremental() %}

-- this filter will only be applied on an incremental run

where event_time > '{{ get_max_event_time() }}'

{% endif %}

Profiling

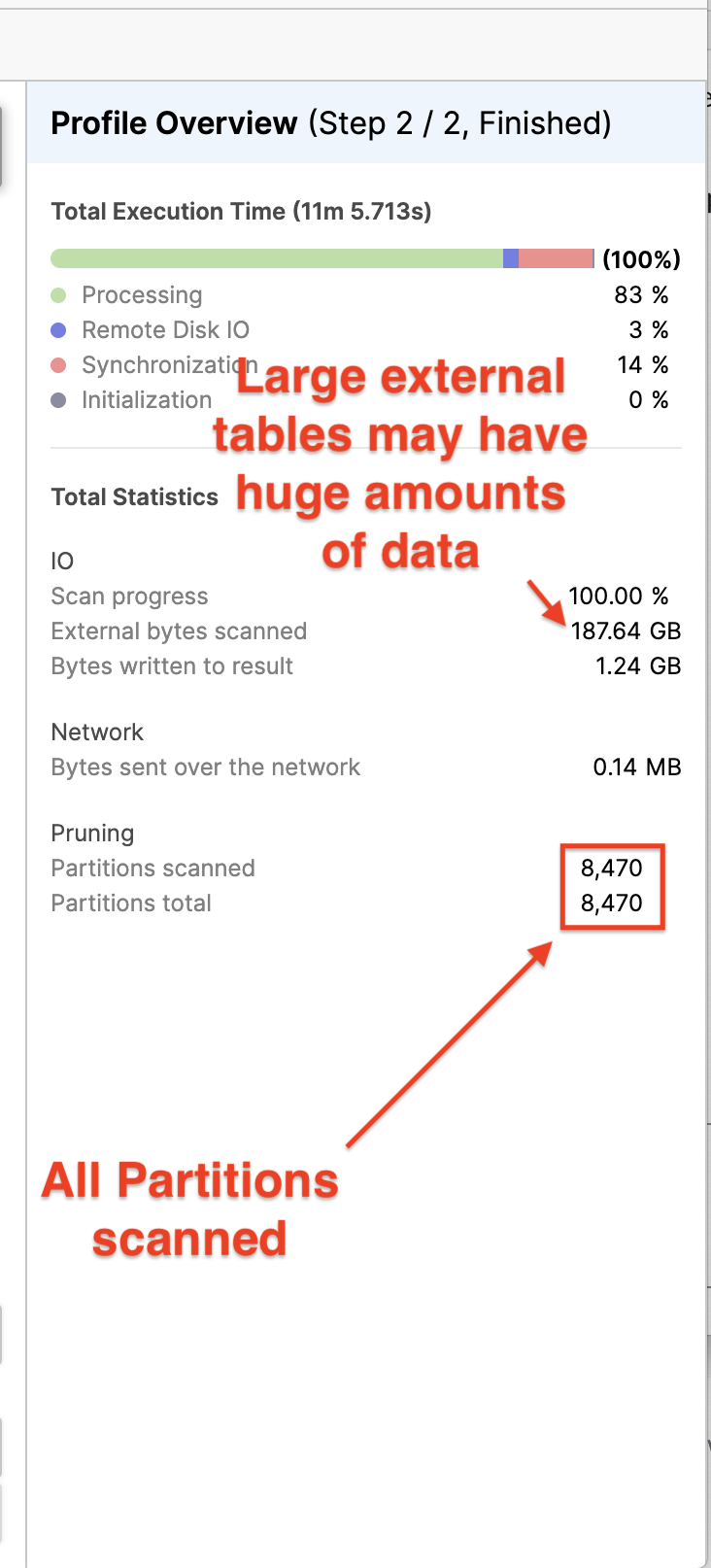

The following example illustrates the effect of subqueries on external tables and partition pruning. Using a subquery can have significant cost implications, because it performs a full table scan. The following query performs a subquery, to fetch the most recent records time:

SELECT

count(*)

FROM

the_external_table as tlog

WHERE

collector_hour >= (

SELECT

max(collector_hour)

FROM

other_table

);

Even though the subquery returns a literal, the outer query still scans every partition in the external table. The query profile for the statement above follows:

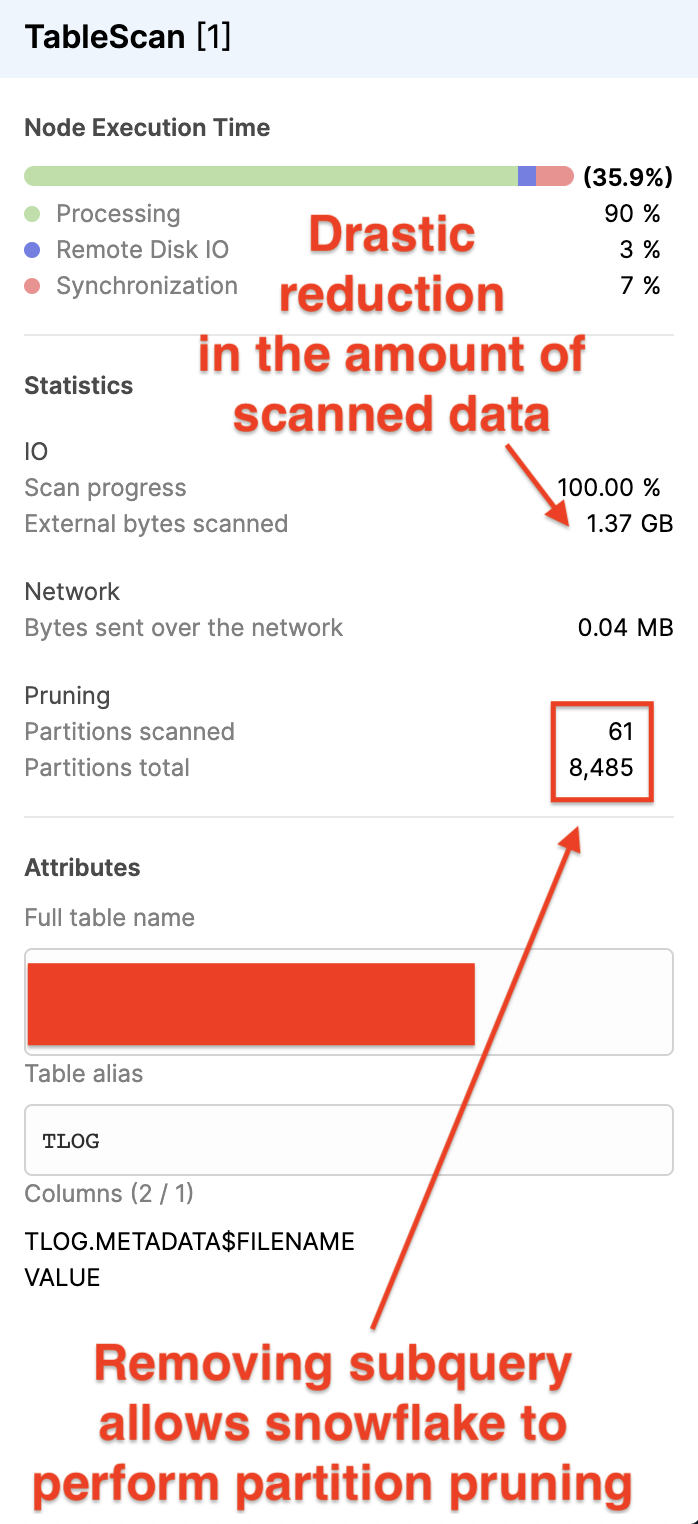

Following shows an example of removing the subquery and passing in the result as a literal:

$output = SELECT

max(collector_hour)

FROM other_table;

SELECT

count(*)

FROM

the_external_table as tlog

WHERE

collector_hour >= $output;

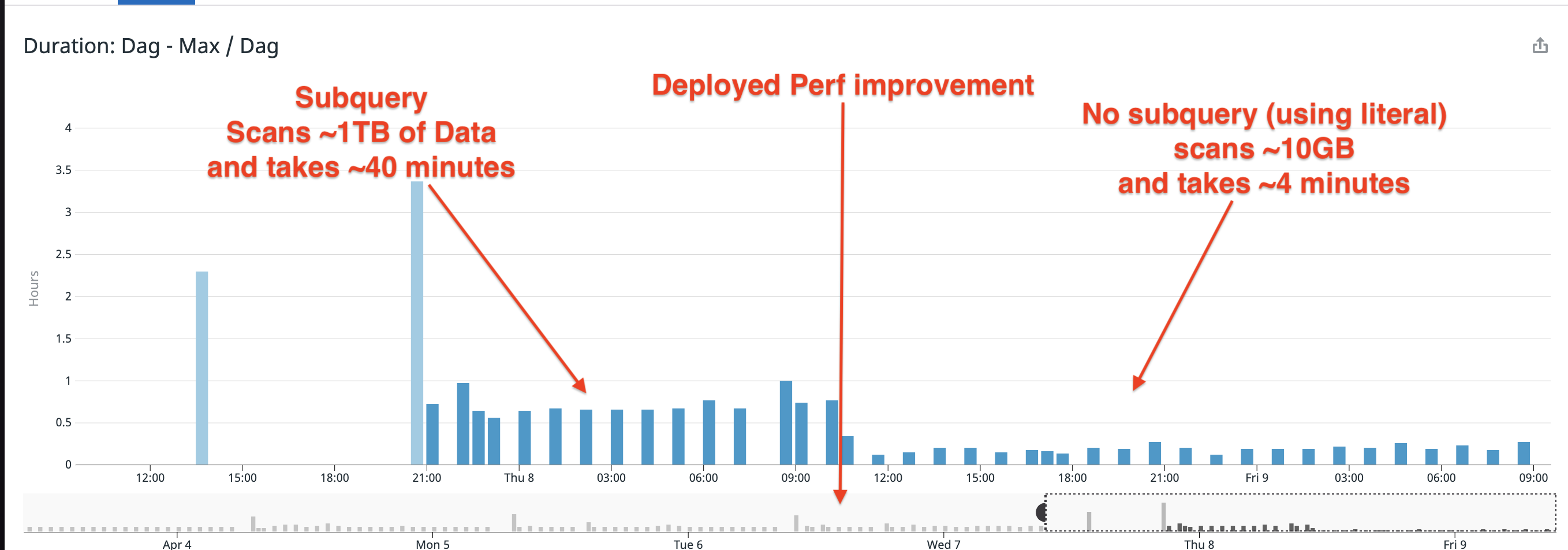

This has profound impact. The following shows the job duration of an external table with 1TB of metadata:

The job runs hourly. The duration was an hour using the subquery approach, and would scan ~1TB of external table metadata. The duration was reduced to 4 minutes when the subquery is removed.

Using this technique resulted in a 90% reduction in snowflake credits for queries against external tables!!