Professional Software - The Unreasonable Effectiveness of Napkin Math Estimation

By Danny Mican

Napkin math estimates are the anabolic steroids of software design. They are an extremely easy way to understand the scale of a problem space. Napkin math is a simple formula for estimating load from known data: it determines how much load a system will need to handle based on reasonable estimates and signals. Professional software design always includes napkin math calculations because they predict the load that software needs to handle so easily and accurately.

Estimations

Imagine you're developing a new HTTP endpoint to load a user's configuration. Loading the configuration makes a call to the relational database backend (such as Postgres).

How many requests will the new feature generate?

You launch the feature and immediately notice that database load has increased significantly, negatively affecting other production workloads. Napkin math can reasonably predict, and help avoid, situations like this. It forecasts and characterizes load using simple algebra and word problems. Don't be fooled by napkin math's simplicity: learning how to make napkin math calculations can be the difference between software failure and success.

Napkin math estimates load using real-life data and documented, reasonable assumptions. It also bakes in a tunable error buffer to help model different load scenarios. The term "napkin math" comes from being able to do these estimates with a pen on the back of a napkin, a testament to how simple they are to perform.

Napkin math can be applied to the HTTP configuration scenario to estimate the load that the new configuration endpoint would put on the database.

In this scenario suppose there are:

- 100,000 Daily Active Users (DAU)

- 10 Admin requests per day per user

- 10% of admin requests will be to the new configuration endpoint

- 3 database queries per /config endpoint call

100,000 DAU

* 10 admin requests / user / day

* 10% config requests (0.10)

* 3 db queries / request

/ 86,400 seconds / day (24 hours * 60 minutes * 60 seconds)

= ~3.5, rounded up to ~4 requests / second to the database
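The same arithmetic can be expressed as a short Python function, which turns each assumption into a named, tunable parameter. This is a minimal sketch: the function and parameter names are illustrative, and, like the hand calculation, it spreads the daily load evenly across 24 hours.

```python
import math

SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def db_queries_per_second(dau: int,
                          admin_requests_per_user_per_day: float,
                          config_fraction: float,
                          queries_per_request: float) -> float:
    """Average database queries per second generated by the /config endpoint."""
    queries_per_day = (dau
                       * admin_requests_per_user_per_day
                       * config_fraction
                       * queries_per_request)
    # Spread the daily total evenly over every second of the day.
    return queries_per_day / SECONDS_PER_DAY


rate = db_queries_per_second(100_000, 10, 0.10, 3)
print(f"{rate:.2f} queries/s, rounded up to {math.ceil(rate)}")
```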

Napkin math quickly estimates an added load of ~4 requests per second on the production database. In this scenario, that is enough to impact other production workloads. The calculation follows a simple pattern that anyone can apply, which is discussed in the next section.

Methodology

Napkin math estimates are unreasonably accurate because they are rooted in real data. Only five components are required to build a napkin estimate:

- Anchor action

- Load factor

- Error estimation

- Unit Normalization

- Document assumptions

To illustrate, suppose we've been tasked with building a centralized logging system, in which all application logs are sent to a single system. Napkin math can help estimate how much load the centralized logging system should expect.

Anchor Action

The first step in napkin math is determining an anchor action. An anchor action provides a reference point for an estimate: it is something that can be measured and for which data is currently available. Most of the time the anchor action relates to a user action, because business software provides value by serving users, which makes users the core driver of software load. A product with no users will probably have very little load, while a product with many users will have high load. Logs are emitted in response to user actions, i.e. web requests, so in this case the anchor action is a "user request".

Calculating the daily user load requires the number of daily active users (DAU) and the average number of requests each user makes per day. This scenario assumes:

- 10,000 DAU

- ~5 requests / user / day

= 50,000 requests / day

Load Factor

The load factor represents the load that each anchor action places on the new feature, in this case the logging system. Load is usually expressed as a number of requests per interval (such as requests per second) or as a volume (such as bytes per second). For the logging example, each request is estimated to generate ~3 log statements at ~1KB per statement. Load factors are estimates and require combining assumptions with data analysis.

In this example, the number of log statements per routine can be counted (e.g. calls to logger.$X), and the output of each log statement can be sampled and its bytes counted to produce a byte-size estimate. It's important to base estimates on reasonable sample sizes. Counting the log statements in a single endpoint may be misleading, while randomly sampling 10% of the endpoints and averaging the number of calls provides a much better estimate of the load factor.
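Below is a minimal Python sketch of the sampling approach. It assumes you have already counted the log statements in each endpoint, for example by searching the code for logger calls. The endpoint names, the counts, and the `estimate_statements_per_request` helper are all hypothetical.

```python
import random
import statistics
from typing import Dict, Optional


def estimate_statements_per_request(statements_by_endpoint: Dict[str, int],
                                    sample_fraction: float = 0.10,
                                    seed: Optional[int] = None) -> float:
    """Average log statements per endpoint over a random sample of endpoints."""
    endpoints = sorted(statements_by_endpoint)
    # Sample at least one endpoint, even for very small services.
    k = max(1, round(len(endpoints) * sample_fraction))
    rng = random.Random(seed)
    sampled = rng.sample(endpoints, k)
    return statistics.mean(statements_by_endpoint[e] for e in sampled)


# Hypothetical data: 40 endpoints with between 1 and 5 log statements each.
counts = {f"/endpoint/{i}": 1 + i % 5 for i in range(40)}
print(estimate_statements_per_request(counts, seed=42))
```

The function takes a plain, unweighted average across endpoints. If traffic is concentrated on a few endpoints, weighting each sampled endpoint by its request volume would likely give a closer load factor.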

50,000 requests / day

* 3 log statements / request

= 150,000 log statements / day
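The example also assumes ~1KB per statement, so the same load factor yields a daily byte volume. The Python sketch below treats 1KB as 1,000 bytes. That choice is an assumption, so use 1,024 if it better matches your measurements. The name `daily_log_volume` is illustrative.

```python
from typing import Tuple


def daily_log_volume(requests_per_day: float,
                     statements_per_request: float,
                     bytes_per_statement: int) -> Tuple[float, float]:
    """Return (log statements per day, bytes per day) for the logging system."""
    statements = requests_per_day * statements_per_request
    return statements, statements * bytes_per_statement


statements, volume_bytes = daily_log_volume(50_000, 3, 1_000)
print(statements)                # log statements per day
print(volume_bytes / 1_000_000)  # megabytes per day
```

With these inputs the result is 150,000 statements and about 150 MB per day, before any error buffer is applied.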

Error Estimation

An error estimate is an uncertainty buffer. Napkin math is quick because it produces a lightweight estimate. An exact answer would require in-depth data collection and analysis and would be much more time consuming. Napkin math therefore makes a tradeoff along an accuracy/time spectrum.

Perfect accuracy would require a huge investment of time, while no accuracy requires almost none. This should be intuitive: we could throw out a random number in a couple of seconds, but that number would have very little meaning (it would be extremely inaccurate).

Napkin math sits in the middle. It can be completed in a couple of hours and provides a reasonable estimate.

An error estimate is a way to explicitly incorporate uncertainty into the napkin math estimation.

An error estimate of 20% is chosen for the logging scenario:

150,000 log statements / day

* 20% Error Buffer (1.20)

= 180,000 log statements / day adjusted

Representing the error estimate as its own coefficient makes the value easy to tune. It acts as a configuration knob: substituting 10% or 30% is trivial and doesn't require reworking the anchor action or the load factor.
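Keeping the buffer as a separate parameter makes it easy to compare scenarios side by side. Below is a minimal Python sketch. The `with_error_buffer` helper is hypothetical, and 150,000 is the unadjusted log statement estimate from the load factor step.

```python
def with_error_buffer(estimate: float, error: float) -> float:
    """Scale an estimate up by an explicit uncertainty buffer (0.20 means 20%)."""
    return estimate * (1 + error)


# Turn the knob: compare several buffers against the same base estimate.
for error in (0.10, 0.20, 0.30):
    adjusted = with_error_buffer(150_000, error)
    print(f"{error:.0%} buffer: {adjusted:,.0f} log statements / day")
```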

Unit Normalization

The final part of the estimation equation is unit normalization. Results should be normalized into easy-to-understand units, with the interval chosen so that the value is a whole number.

For example, ~2 requests / minute (ceil(1.2) requests / minute) should be favored over 0.02 requests / second. Whole things at a coarser interval are often easier to grasp than partial things at a fine-grained interval. The other main reason to round up to whole numbers is that many software estimates are rooted in requests, and requests don't occur in fractions. A request either occurs or it doesn't, so it rarely makes sense to consider partial requests.
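Below is one possible reading of this rule, sketched in Python. It tries intervals from finest to coarsest, picks the first one where the rate is at least 1, and rounds up. The interval list and the `normalize` function are assumptions made for illustration, not a fixed procedure.

```python
import math
from typing import Tuple

# Candidate intervals, finest first, with their length in seconds.
INTERVALS = [("second", 1), ("minute", 60), ("hour", 3_600), ("day", 86_400)]


def normalize(count_per_day: float) -> Tuple[int, str]:
    """Express a daily count per the finest interval that yields at least 1, rounded up."""
    for name, seconds in INTERVALS:
        rate = count_per_day * seconds / 86_400
        if rate >= 1:
            return math.ceil(rate), name
    # Fewer than one occurrence per day: report it per day, still rounded up.
    return math.ceil(count_per_day), "day"


print(normalize(1_728))  # 1,728 per day is 0.02 per second
```

For the example above, `normalize(1_728)` (0.02 requests / second) returns `(2, "minute")`.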

Document Assumptions

Documenting assumptions explicitly calls out the sources of the anchor action and load factor. Documenting assumptions increases the accountability and the accuracy of the napkin estimation.

Documentation should include:

- A description of the data methodology

- Links to the queries that generated the data

- Screenshots

- Callouts of any uncertainty or inaccuracies in the numbers

Documentation for the anchor action could look like:

- Result: 10,000 DAU

- Methodology: DAU was calculated by querying the production database for the number of distinct users making requests to any HTTP endpoint. Daily counts were calculated for the last 2 weeks to make sure weekend traffic patterns were captured, and the 14 daily values were averaged.

- SQL: $PROVIDE THE SQL STATEMENT NECESSARY TO CALCULATE THIS

- Screenshot: A screenshot of the SQL results or a time-series visualization of them.
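The sketch below uses Python's built-in `sqlite3` module to show what the SQL entry might contain. The `requests` table, its `user_id` and `requested_at` columns, and the toy data are all hypothetical. Date functions differ between databases, so a Postgres version would need different date arithmetic.

```python
import sqlite3

# Hypothetical schema: one row per HTTP request, with the user and a timestamp.
DAU_SQL = """
SELECT AVG(daily_users) FROM (
    SELECT date(requested_at) AS day, COUNT(DISTINCT user_id) AS daily_users
    FROM requests
    WHERE date(requested_at) > date(:as_of, '-14 days')
      AND date(requested_at) <= date(:as_of)
    GROUP BY day
)
"""


def average_dau(conn: sqlite3.Connection, as_of: str) -> float:
    """Average distinct users per day over the 14 days ending on as_of (YYYY-MM-DD)."""
    (value,) = conn.execute(DAU_SQL, {"as_of": as_of}).fetchone()
    return value


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE requests (user_id INTEGER, requested_at TEXT)")
# Toy data: on day d (1..14), users 0..(10 + d - 1) each make one request.
rows = [(u, f"2024-01-{d:02d} 12:00:00") for d in range(1, 15) for u in range(10 + d)]
conn.executemany("INSERT INTO requests VALUES (?, ?)", rows)
print(average_dau(conn, "2024-01-14"))
```

Keeping the query text in the estimate's documentation lets reviewers rerun it later and see whether the anchor action has drifted.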

Documentation gives the napkin estimate legitimacy by recording the sources of its data and assumptions.

Putting it Together

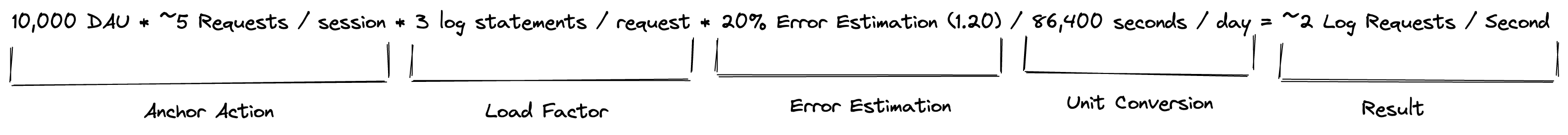

The following is the full napkin equation:

Each of the core components is called out. The final result estimates that the new centralized logging system will need to handle ~2 requests (log statements) per second (180,000 / 86,400 ≈ 2.1). This is critically important: it informs how much load testing needs to occur and helps shape the system design. Every component and design decision in the logging system needs to accommodate this load. Napkin estimates provide data points that help shape a design and avoid project failure.
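The full equation can also be kept in code alongside its documented assumptions. Below is a minimal Python sketch that uses the logging scenario's numbers. The `NapkinEstimate` class and the assumption strings are hypothetical examples.

```python
from dataclasses import dataclass, field
from typing import List

SECONDS_PER_DAY = 86_400


@dataclass
class NapkinEstimate:
    """Hypothetical container for a napkin estimate and the assumptions behind it."""
    dau: int                          # anchor action: daily active users
    requests_per_user_per_day: float  # anchor action: requests per user per day
    statements_per_request: float     # load factor
    error_buffer: float               # error estimate, e.g. 0.20 for 20%
    assumptions: List[str] = field(default_factory=list)  # documented sources

    def per_day(self) -> float:
        return (self.dau
                * self.requests_per_user_per_day
                * self.statements_per_request
                * (1 + self.error_buffer))

    def per_second(self) -> float:
        return self.per_day() / SECONDS_PER_DAY


logging_estimate = NapkinEstimate(
    dau=10_000,
    requests_per_user_per_day=5,
    statements_per_request=3,
    error_buffer=0.20,
    assumptions=[
        "DAU: 14-day average of distinct users making requests",
        "Load factor: average log statements over sampled endpoints",
    ],
)
print(f"{logging_estimate.per_day():,.0f} log statements / day")
print(f"{logging_estimate.per_second():.1f} log statements / second")
```

With these inputs it reports 180,000 log statements per day, or about 2.1 per second.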